
Nothing is real, nothing to get hung about

A look at virtual memory methodology and other things.

Now that solid-state (SSD) technology is rapidly replacing hard disk (HDD) technology, a lot of the criticism of virtual memory methodologies is moot, but as hard drives will still play a role for some time to come, the matter should still be discussed. There are a lot of legacy Macs out there, and they have the good old trusty disk drive that's been a part of the PC revolution from the beginning. And sometimes they're in pain.

Or almost from the beginning. Like early Apples, IBM's original PC did not have a hard drive. But the PC XT, released afterwards, did: a 'Winchester' hard drive with a walloping 10 MB of disk space.

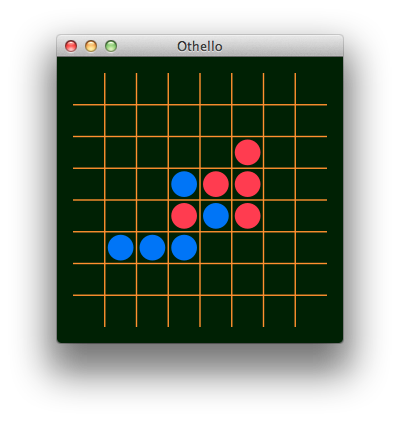

Unix was developed on PDP machines from Digital Equipment Corporation - machines so lacking in 'real' memory (or 'random access memory' or 'RAM') that the board of IBM reportedly laughed when they first read the specs. (IBM mainframes at the time had only 1 MB RAM, but even so.) The key was time-sharing and use of virtual memory.

Time-sharing is relatively easy to explain without getting too technical: it's the way a single computer can handle multiple terminals at the same time, much like modern personal computers handle multiple processes at once. Virtual memory is a bit more difficult to explain, and makes up most of this discussion.
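To make 'time-sharing' a bit more concrete, here's a minimal round-robin sketch. Each job gets a fixed slice of CPU time and then goes to the back of the queue, so every terminal sees steady progress. The terminal names and tick counts are made up for illustration, and real schedulers also juggle priorities and jobs waiting on I/O.

```python
from collections import deque

def round_robin(jobs, quantum):
    """Simulate simple time-sharing: each job runs for at most `quantum`
    ticks, then goes to the back of the queue. `jobs` maps a job name to the
    ticks of CPU it still needs. Returns the order in which jobs finish."""
    queue = deque(jobs.items())  # (name, remaining ticks)
    finished = []
    while queue:
        name, remaining = queue.popleft()
        remaining -= min(quantum, remaining)  # run for one time slice
        if remaining:
            queue.append((name, remaining))   # not done yet: wait for another turn
        else:
            finished.append(name)
    return finished
```

With `round_robin({"tty0": 5, "tty1": 2, "tty2": 3}, 2)` the short jobs on `tty1` and `tty2` finish before the long one on `tty0`, even though `tty0` was first in line.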

The basic premise of virtual memory is that computers can run out of real memory. Real memory is expensive (and disks are comparatively cheap). When a computer runs out of real memory for all the programs it's running, it can cheat by using allocated disk space as if it were real memory.

But real memory is easy to access - and fast too: it's right there, either on the mobo or close by, just across a bus.

Disk memory used as real memory is not fast. CPU speeds are measured in nanoseconds (billionths of a second). Disk speeds (median access times) are measured in milliseconds (thousandths of a second). That's a gap of six orders of magnitude: the one can be a million times as fast as the other.
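The textbook way to put a number on that gap is the effective access time: EAT = (1 - p) × t_mem + p × t_fault, where p is the fraction of memory accesses that have to go to disk. The sketch below assumes round figures of 100 ns for a RAM access and 10 ms to service a fault from a hard drive. These are illustrative values, not measurements of any particular Mac.

```python
def effective_access_ns(fault_rate, mem_ns=100.0, fault_ns=10_000_000.0):
    """Average time per memory access (in ns) when a fraction `fault_rate`
    of accesses must wait for a page to be read from disk.
    Default timings are assumed round numbers: 100 ns RAM, 10 ms disk."""
    if not 0.0 <= fault_rate <= 1.0:
        raise ValueError("fault_rate must be between 0 and 1")
    return (1.0 - fault_rate) * mem_ns + fault_rate * fault_ns

def slowdown(fault_rate, mem_ns=100.0, fault_ns=10_000_000.0):
    """How many times slower memory appears than pure RAM access."""
    return effective_access_ns(fault_rate, mem_ns, fault_ns) / mem_ns
```

With these assumptions, sending just one access in a thousand to disk (`slowdown(0.001)`) makes memory look roughly a hundred times slower.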

Because hard drives are so slow, Ken Thompson used a type of disk output known today as 'lazy write'. The idea was not to jitter the disk arm (analogous to the tone arm of an old phonograph) unnecessarily. The arm moves the read/write heads in and out, from the perimeter to the centre and back again, over cylinders (hard drives are normally like a stack of pancakes, with several platters on top of each other). The idea with lazy write is to change the cylinder location as little as possible between operations, both to cut down on wear and tear and to increase efficiency. So Ken's idea was to use callbacks and cached data to drive output: the disk controller asks for a new write operation, and the kernel, knowing the current cylinder location, responds with a pending write as close to the last one as possible.
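The "closest pending write next" policy described above can be sketched as shortest-seek-time-first ordering. This is a generic illustration of the technique, not a reconstruction of Thompson's code. Cylinder numbers are plain integers, and the helper names are made up.

```python
def nearest_first(start_cyl, pending):
    """Order pending requests by always servicing the one closest to the
    head's current cylinder (shortest-seek-time-first)."""
    remaining = list(pending)
    order = []
    pos = start_cyl
    while remaining:
        nxt = min(remaining, key=lambda cyl: abs(cyl - pos))
        remaining.remove(nxt)
        order.append(nxt)
        pos = nxt  # the heads are now parked over this cylinder
    return order

def seek_distance(start_cyl, order):
    """Total number of cylinders the heads cross servicing `order`."""
    total, pos = 0, start_cyl
    for cyl in order:
        total += abs(cyl - pos)
        pos = cyl
    return total
```

Starting at cylinder 50 with writes queued for 10, 95, 52 and 40, taking them in arrival order crosses 180 cylinders. Taking the nearest one each time (52, 40, 10, 95) crosses 129. A known weakness is that far-off requests can starve, which is why 'elevator' (SCAN) variants sweep in one direction at a time.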

[Yes, this is why one should never just kill the power to a Mac rather than shutting it down properly - write ops can still be pending. Ed.]
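A program that can't afford to leave its writes pending can ask the kernel to flush them. The sketch below uses the standard `fsync()` call through Python's `os` module. The function name `durable_write` is made up for illustration. On macOS, Apple's fsync(2) man page notes that data may still sit in the drive's own cache afterwards. `fcntl` with `F_FULLFSYNC` goes a step further, but it isn't used here.

```python
import os

def durable_write(path, data):
    """Write `data` (bytes) to `path` and don't return until fsync() has
    asked the kernel to push it out of its write cache, rather than leaving
    it pending in memory. Returns the number of bytes written."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        view = memoryview(data)
        written = 0
        while written < len(view):          # os.write may write only part
            written += os.write(fd, view[written:])
        os.fsync(fd)                        # flush pending writes for this file
    finally:
        os.close(fd)
    return written
```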

Original Unix ran on teletype machines. No need for memory for graphical applications. Commands were thankfully cryptic and responses more so if possible. The now-accepted paradigm of 'no news is good news', so violated by wordy Microsoft, was also key to efficient use.

[Ken called the move operation 'mv', the copy operation 'cp', the list operation 'ls', and so on. When asked if he'd do anything differently, he said he'd put an 'e' on the end of the file API 'creat'. Ed.]

Not much happened in realtime back then, beyond waiting for programs to launch, initiate, and run. Multitasking within a single user environment wasn't much of an issue.

But things changed. Apple came out with the Mac in 1984, Microsoft scrambled to compete with their own GUI, and so forth. And GUIs demand a lot of memory.

Virtual memory systems can be seen in one of two ways. The first way is to simply see virtual memory as an extension of real memory that's used when needed. The system will allocate disk space as needed and then put things in there. And so on. This is the basic way the Unix VM system works, and the basic way Apple's VM system for OS X works to this day.

A fresh boot of a Mac will give you a starting VM file of 64 MB or thereabouts. You can find this file in /private/var/vm. As things go on, and as the system finds it needs more and more virtual memory, additional 'swap files' are created. The first two are usually of the same size; after that, file sizes increase geometrically. A listing of that directory might look like this (sleepimage is the hibernation file the Mac writes RAM into when it sleeps; swapfile0 is the first swap file):

```
-rw------T 1 root wheel 4294967296 Aug 21 05:22 sleepimage
-rw------- 1 root wheel 67108864 Aug 19 14:02 swapfile0
```
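Here is a small sketch of the growth pattern and of how to inspect the directory yourself. The sizing model assumes the 'geometric' growth is a doubling (64 MB, 64 MB, 128 MB, 256 MB, ...). That ratio is an assumption for illustration, not taken from Apple's source. The `vm_files` helper just lists file names and sizes. Depending on permissions, reading /private/var/vm may need elevated privileges.

```python
import os

MiB = 2 ** 20

def swapfile_sizes(count, first=64 * MiB):
    """Model of the pattern described above: the first two swap files share
    a size, and each later one doubles (the factor of 2 is an assumption)."""
    sizes = []
    for i in range(count):
        sizes.append(first if i < 2 else sizes[-1] * 2)
    return sizes

def vm_files(directory="/private/var/vm"):
    """List (name, size in bytes) for the regular files in the VM directory."""
    with os.scandir(directory) as entries:
        return sorted((e.name, e.stat().st_size) for e in entries if e.is_file())
```

The model's first size, 64 MiB, is exactly the 67108864 bytes shown for swapfile0 above.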

Resource hogs - apps that use a lot of memory - are what will slow a Mac down. And if you're especially unlucky, you'll see that spinning beach ball that was otherwise made extinct with the release of Jaguar 10.2.

But things can get much worse. A beach ball spinning for a second or two is impolite of an OS, but it's no big deal and quickly forgiven; a beach ball spinning for a significant part of a minute or more is not. Not when everything else on the desktop freezes, not when programming guidelines (e.g. the 'user in control' principle) frown on 'waits' longer than 2.5 to 3 seconds.

What's happening behind the scenes when this happens? The overtasked virtual memory system is writing data in a frenzy. And because disk operations run by root can take such a high priority, and because the kernel as a whole needs the memory restored and correctly aligned before proceeding, everything goes out to lunch. And you're stuck with a seemingly endless beach ball.
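A toy model shows why things fall off a cliff rather than degrading gently. The sketch counts page faults under least-recently-used (LRU) replacement. LRU is a stand-in chosen for clarity, not a description of the OS X pager. A program cycles through five pages. With five page frames it faults only on first touch. With just one frame fewer, every single access faults, and every fault means disk I/O. That is thrashing.

```python
from collections import OrderedDict

def lru_faults(references, frames):
    """Count page faults for a sequence of page references under LRU
    replacement with `frames` physical page frames."""
    resident = OrderedDict()   # page -> None, least recently used first
    faults = 0
    for page in references:
        if page in resident:
            resident.move_to_end(page)        # hit: mark as most recently used
        else:
            faults += 1                       # miss: page must come from disk
            if len(resident) >= frames:
                resident.popitem(last=False)  # evict the least recently used page
            resident[page] = None
    return faults
```

For `refs = list(range(5)) * 20` (100 accesses), `lru_faults(refs, 5)` gives 5 faults, while `lru_faults(refs, 4)` gives 100.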

Why so much swapping and so many internal disk writes are necessary is a mystery that could only be solved by studying the source code and the overall logic, but it's clear the methodology is a failure. To see what could possibly be done, one might cast a glance back to those archaic PDP machines and the man who finally delivered on their OS.

Unix came about because Ken Thompson succeeded in getting Bell Labs to purchase two DEC computers at a time when spending was largely frozen, but the PDP computers were delivered without an operating system (promised 'real soon now').

Ken and dmr (Dennis Ritchie) spent a lot of time discussing how they hoped the coming operating system would work, until a colleague tired of listening to them and told them to write their own flipping system instead. The system they invented was of course Unix, and the system that arrived too late was written by Dave Cutler of DEC. Dave Cutler went on to join Microsoft years later, give them their 32-bit Windows NT and successive systems, and generally rub the face of Bill Gates around in the Redmond dirt.

Dave was a self-made programmer and system architect. He learned by doing. He was legendary at DEC and equally so at Microsoft, where he worked mostly with his old 'tribe' of programmers from a DEC research lab in Seattle. And he didn't take shit from anyone. Dave's idea of virtual memory turns the original concept on its head.

The classic idea of virtual memory is 'take to disk when real memory runs out'. Dave's idea is to use disk memory first and foremost and only 'take to real memory when it's needed'. This is possible because of a processor event known as a page fault.

An interrupt is, as its name implies, something fed to a CPU that interrupts, because there might be something more urgent to do. Some interrupts can be ignored for a time, but the most severe, known as non-maskable interrupts, cannot. A page fault can't be ignored either, though strictly speaking it isn't an interrupt at all but an exception: the CPU raises it itself, on the very instruction that touched the missing page, and it can't be masked.

Contrary to what its name seems to imply, a page fault is not a mistake - it means only that a page (usually a 4 KB chunk) of memory that a program has just touched isn't currently present in real memory. To fully understand this, one needs to look further at virtual memory before proceeding.

Nothing is real, nothing to get hung about

Virtual memory is more than an extension of RAM. Virtual memory also protects the system from freaky code (wild pointers, etc.) and protects its devices as well. Processors admit of two modes of operation: user mode (one often hears reference to 'user land') and kernel or privileged mode. The basic idea is that 'nothing is real but there's nothing to get hung about'.

Ordinary applications cannot be allowed access to system hardware. There are other applications running that they might screw up. Essential to running such a system is that ordinary application software must not be aware that anything has changed. When an application in 'user land' accesses a variable at such-and-such an address, it has to think it's asking for a real address.

But of course it's not. The memory access goes through the CPU's memory management unit (MMU), where the address (address range) is looked up in what are known as page tables. These are internal bookkeeping, maintained by the kernel to make sure that everything is nice and tidy and all the children play well together.

The address asked for (a virtual address) is looked up; the system finds the real address corresponding to that virtual address (address range); and then the fun starts.
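The lookup can be sketched in a few lines. Real MMUs walk multi-level tables in hardware and cache results in a TLB. Here a plain dict stands in for a one-level page table, pages are assumed to be 4 KB, and `translate` and `PageFault` are hypothetical names. A virtual address splits into a page number and an offset within the page. Only the page number is translated.

```python
PAGE_SIZE = 4096  # assumed 4 KB pages, as in the text

class PageFault(Exception):
    """Raised when a virtual page has no real page frame behind it."""
    def __init__(self, vpn):
        super().__init__(f"page fault on virtual page {vpn}")
        self.vpn = vpn

def translate(vaddr, page_table):
    """Map a virtual address to a real one via a one-level page table
    (a dict from virtual page number to physical frame number)."""
    vpn, offset = divmod(vaddr, PAGE_SIZE)   # which page, and where inside it
    frame = page_table.get(vpn)
    if frame is None:
        raise PageFault(vpn)                 # nothing resident: the CPU faults
    return frame * PAGE_SIZE + offset
```

With `{0: 7, 1: 3}` as the table, virtual address 4100 (page 1, offset 4) lands at real address 3 × 4096 + 4 = 12292, and any address on page 2 raises `PageFault`.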

It can happen that the real memory once used for the application has been reclaimed for use by another process, its contents written out to disk (or that the page was never loaded in the first place). This is where a page fault will occur. The CPU is signalling that the 'page' of memory is not available.

The fault is raised as a so-called exception, and the kernel 'catches' it. The system then has the opportunity to restore the missing page before the CPU retries the instruction that faulted.

And so forth.

So the general rule: when a program touches memory that's not there, a page fault is raised, and the system recovers by reading the missing page back into memory and then trying again.
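That fault-load-retry loop is easy to model. In the sketch below, a dict plays the page table, a list of bytearrays plays RAM, and a dict of bytes plays the swap or executable file on disk. All the names are hypothetical. Nothing is ever evicted, which is a simplification: a real system would also have to pick a victim page when RAM is full.

```python
PAGE_SIZE = 4096  # assumed page size

def read_byte(vaddr, page_table, ram, backing_store, stats):
    """Read one byte at a virtual address. On a miss (a 'page fault'),
    copy the page in from the backing store, record where it now lives in
    the page table, and retry the same access."""
    while True:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        frame = page_table.get(vpn)
        if frame is not None:
            return ram[frame][offset]               # translation succeeded
        stats["faults"] += 1                        # a fault, not an error
        ram.append(bytearray(backing_store[vpn]))   # read the page back in from "disk"
        page_table[vpn] = len(ram) - 1              # update the page table
        # loop around and retry the very same access
```

Starting with an empty page table, the first touch of each page faults once and every later touch of that page is a plain memory read.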

Once more: page faults are not errors. The cumulative number of page faults on your Mac since boot can run into the tens or hundreds of millions.
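Any process can read its own running totals from the kernel with the standard `getrusage()` call. Per its documentation, `ru_minflt` counts faults serviced without any I/O (page reclaims) and `ru_majflt` counts faults that needed I/O. A minimal sketch:

```python
import resource

def fault_counts():
    """Return (minor, major) page-fault totals for this process so far.
    Minor faults were resolved without disk I/O; major faults needed it."""
    usage = resource.getrusage(resource.RUSAGE_SELF)
    return usage.ru_minflt, usage.ru_majflt
```

Even a freshly started Python interpreter will typically report thousands of minor faults, all of them perfectly normal.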

Dave Cutler's idea of virtual memory is based on exploiting page faults. Many Unix systems, including OS X, do so as well, but Dave's idea seems to go a bit further.

Dave didn't see a point in starting a system with a meagre swap file - instead, make sure there's enough swap available on boot to represent every single last byte in RAM. This is not unreasonable: Macs can have RAM in multiples of 4 GB, but hard drives are usually one hundred times that size. Losing 4 or 8 or 16 GB to an on-disk swap file is no tragedy. And the way Apple's OS X runs, you're going to get there sooner or later anyway.
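The arithmetic behind 'no tragedy' can be checked on any machine. The sketch reads installed RAM via `sysconf` and disk size via `shutil.disk_usage`. Note that `SC_PHYS_PAGES` is widely supported but not guaranteed by POSIX, so `physical_ram_bytes` may raise on some systems. The function names are made up for illustration.

```python
import os
import shutil

GiB = 2 ** 30

def physical_ram_bytes():
    """Installed RAM, from sysconf (SC_PHYS_PAGES is common but not
    required by POSIX, so this may raise ValueError on some systems)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")

def swap_share_of_disk(ram_bytes, disk_bytes):
    """Fraction of a disk taken up by a swap file as large as RAM."""
    return ram_bytes / disk_bytes

def current_machine(path="/"):
    """(RAM bytes, disk bytes, share) for this machine and volume."""
    ram = physical_ram_bytes()
    disk = shutil.disk_usage(path).total
    return ram, disk, swap_share_of_disk(ram, disk)
```

With a drive a hundred times the size of RAM, as in the text, the share is 1%: `swap_share_of_disk(16 * GiB, 1600 * GiB)` is 0.01.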

Comparing apples and oranges in this way can seem futile (and often is), but for all the bad things in a Microsoft system (too numerous and serious to contemplate using one), the virtual memory system there runs a lot better. Windows systems can crash and hang all over the place, but getting bogged down because the VM system starts tripping over its own feet: that's much less likely to happen.

Both Cutler and OS X go further still in implementing virtual memory. The old Microsoft documentation for Cutler's 'CreateProcess' API stated that the function is nearly instantaneous. And that was correct - all that was done was a bit of bookkeeping in real memory. The fun started instead when the app was to run.
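The same 'bookkeeping now, real work later' principle can be seen from user land. The sketch maps a large private anonymous region, which is cheap because the kernel only records it, and then writes to a few pages, each of which faults on first touch. It assumes a Unix system where `mmap.MAP_ANONYMOUS` is available. The writes are spaced `stride` pages apart, so each lands on its own page even if the kernel backs the region with large pages. The function names are hypothetical, and exact counts will vary by system.

```python
import mmap
import resource

PAGE = mmap.PAGESIZE  # the system page size (often 4 KB)

def minor_faults():
    return resource.getrusage(resource.RUSAGE_SELF).ru_minflt

def reserve_then_touch(reserve_pages, touch_count, stride=512):
    """Map `reserve_pages` pages of private anonymous memory, then write one
    byte to `touch_count` pages spaced `stride` pages apart. Returns the
    minor-fault deltas for (reserving, touching)."""
    if touch_count and (touch_count - 1) * stride >= reserve_pages:
        raise ValueError("region too small for that many spaced-out touches")
    before = minor_faults()
    region = mmap.mmap(-1, reserve_pages * PAGE,
                       flags=mmap.MAP_PRIVATE | mmap.MAP_ANONYMOUS)
    after_reserve = minor_faults()
    for i in range(touch_count):
        # First write to a page traps into the kernel, which only now
        # finds a real page frame for it.
        region[i * stride * PAGE] = 1
    after_touch = minor_faults()
    region.close()
    return after_reserve - before, after_touch - after_reserve
```

On a typical Linux box, `reserve_then_touch(16384, 32)` reserves 64 MB for next to no faults, then pays roughly one fault per page actually written.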

Here's another cool trick: executables are divided into a number of sections, with one for data, another for 'text' (code) and so forth. The text (code) section isn't going to change when the program runs, but the data section of course will.

So systems load applications by mapping the virtual memory of an executable image directly onto its actual file on disk. That makes program loads very fast. But as the data will change as things progress, data sections are marked 'copy on write'.

Copy on write means that a page of data is copied to a new location the first time it's written to. Copy to where? To ordinary system virtual memory, the kind that's backed by the swap files rather than by the executable.
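Python's `mmap` module exposes copy-on-write directly. `access=mmap.ACCESS_COPY` gives a private mapping: assignments change the memory but never update the underlying file. That is roughly the mechanism described above for data sections, shown on an ordinary file rather than an executable. The function name is made up for illustration.

```python
import mmap

def copy_on_write_demo(path, patch):
    """Map a file copy-on-write, overwrite its first bytes in memory, and
    report what the mapping and the file on disk each contain afterwards."""
    with open(path, "rb") as f:
        view = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_COPY)
        try:
            view[:len(patch)] = patch      # first write: page gets a private copy
            in_memory = view[:len(patch)]
        finally:
            view.close()
    with open(path, "rb") as f:
        on_disk = f.read(len(patch))       # the file itself is untouched
    return in_memory, on_disk
```

On a file containing `b"hello, world"`, patching in `b"HELLO"` yields `(b"HELLO", b"hello")`: the write landed in private memory, not in the file.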

You can test this and other concepts for yourself. An executable image is loaded into memory as page faults demand it. (NIBs are not loaded until directly invoked.)

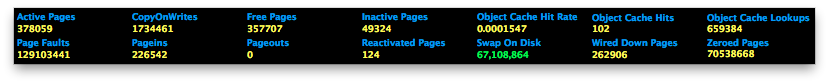

Take a Cocoa application of your choosing, copy it in toto to another location, launch the copy, and then start deleting parts of its bundle. (The example used here is Othello, seen onscreen to be running admirably with no executable image on disk and even its parent subdirectory 'MacOS' removed. Remove the 'Resources' subdirectory, however, and you'll soon run into trouble, even though you can still go on playing the game.)

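The data section was copied to ordinary virtual storage as soon as anything in it was written to, so its VM backing now resides somewhere else. The text (code) section is either already in memory or still readable from disk, because deleting a file on Unix only removes its name: the data itself stays put until the last process holding it open or mapped lets go. Piece of cake.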
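The 'deleted but still running' part can be reproduced without a Mac. The sketch maps a file, unlinks it, and then reads the mapping. The directory entry is gone, but the mapped data is still there. This relies on ordinary Unix unlink semantics, and the function name is hypothetical.

```python
import mmap
import os

def survives_unlink(path):
    """Map a file read-only, delete it, then read the mapping. Returns
    (does the name still exist?, bytes read through the mapping)."""
    with open(path, "rb") as f:
        view = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)
    os.unlink(path)                         # the name is gone from the directory...
    try:
        return os.path.exists(path), view[:]  # ...but the data is still readable
    finally:
        view.close()
```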

What can be done to speed up legacy Macs? Not much, as there's no way Apple will do anything with that old code. The error, if any, isn't Apple's doing anyway - in that case it's the FreeBSD team who would need to look at it.

But by making sure a system has enough virtual storage, right from the start, for every byte of RAM, and by revising algorithms to cut down or completely eliminate disk thrashing when cleaning up VM, things could have been a lot better.

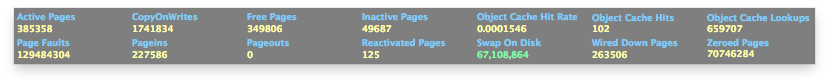

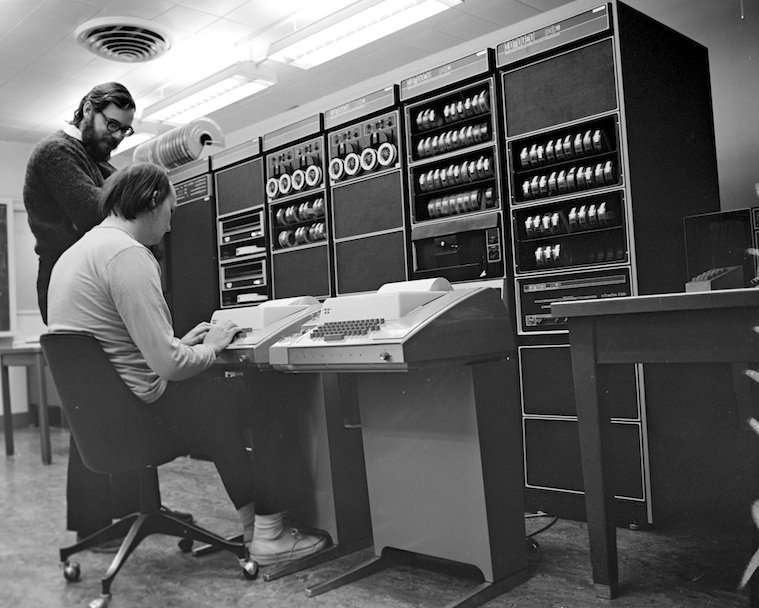

New systems with SSDs instead of HDDs will hardly notice a thing. No more beach balls. Hopefully.

Safari is one of the worst resource hogs seen on most Macs, and it needn't be: it's not impossible to remove all memory leaks. The ACP went through a major audit a year back, so that not a single ACP app leaks memory anymore. Many or most of those leaks weren't caused by errors in application code but by the Cocoa APIs themselves, so the task became one of fiddling with those APIs until ways were found to skip around the leaks. It can evidently be done. So it shouldn't be overly demanding for a team at Apple, with all the source code available, to accomplish the same thing. Yet release after release, Safari leaks like a sieve, with certain versions almost unusable. Here's a leaks report on a recently launched Safari.

```
Process 17992: 343 leaks for 106864 total leaked bytes.
Process 17993: 8435 leaks for 913992 total leaked bytes.
```

Nearly 9,000 memory leaks (8,778 across the two processes, about 1 MB in all) for such a short run is simply unacceptable, and yet this version of Safari is one of the better-behaved.
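Finding leaks starts with measuring growth that never comes back. As a rough analogue of what a leaks report summarises, the sketch below uses Python's standard `tracemalloc` module to measure memory still allocated after repeated calls. The 'leak' here is a hypothetical global cache that is never cleared. In Python that's a retained reference, not a lost pointer as in C or Objective-C, but the symptom (steady growth per call) looks the same.

```python
import tracemalloc

_cache = []  # hypothetical: a global that is appended to and never cleared

def leaky_request(n_bytes=1024):
    _cache.append(bytearray(n_bytes))

def leaked_bytes(calls, fn):
    """Traced memory still allocated after calling `fn` `calls` times."""
    tracemalloc.start()
    try:
        before, _ = tracemalloc.get_traced_memory()
        for _ in range(calls):
            fn()
        after, _ = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return after - before
```

A thousand calls to `leaky_request` leave at least a megabyte behind, while a function that allocates and discards the same buffer leaves next to nothing.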

As memory fills up, as more and more resources are saved and stored in more and more weird locations, something eventually breaks. And you see that spinning beach ball artifact all over again.

The best thing you can do is just kill the fucker. Start it again to remove all cookies and assorted cruft, then exit and start again to make sure it's all gone. Then do a proper clean of on-disk caches, including your own hive in /var/folders, then reboot.

Again, new Macs with SSDs won't need this as much. The system can expand virtual memory as much as before, but those millisecond media access times are gone.

See Also

Swapwatch: Spin Ball Spin
